Smarter Than Real: The Rise of Synthetic Data in AI

Why AI’s next leap forward depends on the data we invent, not just the data we collect.

Data is everywhere. It’s the fuel that powers artificial intelligence, the digital currency of the internet, and the foundation of every AI-driven product we use daily. Yet, despite its immense value, data is often seen as a bottleneck—messy, expensive, and full of pitfalls. Ughhh.

Many companies struggle with data-related challenges. We’ve all heard stories in the industry: “The project didn’t work out—they had issues with data.” It’s as if data were a minefield, something to be cautiously navigated rather than harnessed for innovation. But what if, instead of seeing data as a roadblock, we saw it as a launchpad?

That’s exactly what synthetic data is doing—turning AI development into a faster, smarter, and more reliable process. No more messy datasets or privacy concerns—just high-quality data designed to power innovation.

Artificial data is AI’s ideal training partner—designed for precision, free from human flaws, and limitless in scale. As AI expands what it means to be intelligent, its data must evolve beyond human limitations, too.

My 4 AM thought

What Is Synthetic Data? A Simple Breakdown

For a deeper dive into how synthetic data can be leveraged competitively, check out this resource: MIT Sloan: What is Synthetic Data and How Can It Help You Competitively?

Before we dive into why synthetic data is game-changing, let’s define it. Synthetic data is artificially generated information that mimics real-world data while avoiding its limitations. It’s not random noise—it follows the same statistical patterns and distributions as real data but is created rather than collected.

Unlike real-world data, which is often biased, incomplete, or entangled in privacy regulations, synthetic data can be designed to be fair, scalable, and free of sensitive information, making it an ideal training ground for AI models.
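To make "follows the same statistical patterns" a little more concrete, here is a minimal sketch of the simplest possible generator: estimate a distribution from a handful of real values, then sample new values from it. The `real_ages` list is made up for illustration, and assuming a normal distribution is a deliberate simplification. Real generators model far richer structure.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and standard deviation of the real observations."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(mean, stdev, n, seed=0):
    """Draw n synthetic values from the fitted normal distribution."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Hypothetical "real" measurements (invented for this example).
real_ages = [23, 35, 41, 29, 52, 47, 38, 31, 44, 36]

mean, stdev = fit_gaussian(real_ages)
synthetic_ages = sample_synthetic(mean, stdev, n=10_000)

print(f"real:      mean={mean:.1f}, stdev={stdev:.1f}")
print(f"synthetic: mean={statistics.mean(synthetic_ages):.1f}, "
      f"stdev={statistics.stdev(synthetic_ages):.1f}")
```

The synthetic values are new numbers, not copies of the originals, yet their mean and spread track the real sample. That is the core idea, even if production tools are far more sophisticated.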

How AI Is Evolving with Artificially Generated Data

A few months ago, I watched a fascinating interview with Karina Nguyen, an AI researcher who worked at Anthropic (on Claude) and now works at OpenAI (on ChatGPT). She described how synthetic data is becoming the backbone of AI development and shared key strategies for using it effectively. Check out the full conversation here: YouTube: Karina Nguyen on Synthetic Data.

She emphasized four crucial areas where synthetic data plays a role:

Balancing Conflicting Training Data – AI models are trained on massive datasets, which often contain contradictory information. Synthetic data helps mitigate these inconsistencies and ensure models learn in a structured way.

Enabling Faster Iteration – Unlike real-world data, which takes time to collect and clean, synthetic data can be generated instantly, allowing AI teams to test and refine their models rapidly.

Managing Model Behavior Across Diverse Scenarios – AI models must perform well across a wide range of situations. Synthetic data allows teams to simulate edge cases and rare events that wouldn’t naturally appear in a dataset.

Building Robust Evaluation Frameworks – The ability to measure and assess AI performance is crucial. Synthetic data provides a controlled environment for rigorous testing and fine-tuning.

The biggest AI companies in the world are already leveraging these techniques. But the potential extends far beyond OpenAI, Anthropic, or Google DeepMind—startups and mid-sized businesses can also benefit enormously.

It’s Not Fake — It’s Smarter

Transparency in AI training data is crucial. Learn more about efforts to make data used in AI more transparent here: MIT Sloan: Bringing Transparency to Data Used to Train AI

The term synthetic might make it sound artificial or unreliable, but the reality is quite the opposite. In many cases, synthetic data is cleaner and more accurate than real-world data.

Just think about it: real-world data is messy and full of biases, errors, and inconsistencies. It’s created by people, meaning it’s prone to mistakes, misinformation, and unintended skewing of facts. Some researchers have reported that models trained on carefully curated synthetic data can perform better and make fewer mistakes than those trained on raw internet data.

Additionally, synthetic data can help with one of the biggest problems in AI ethics: bias. Since it can be carefully designed and adjusted, it helps create fairer models by reducing the discrimination baked into real-world datasets.

How Artificial Data Is Reshaping AI Products

Many businesses are just starting to recognize the power of synthetic data. But for those willing to invest in this approach, the benefits are game-changing:

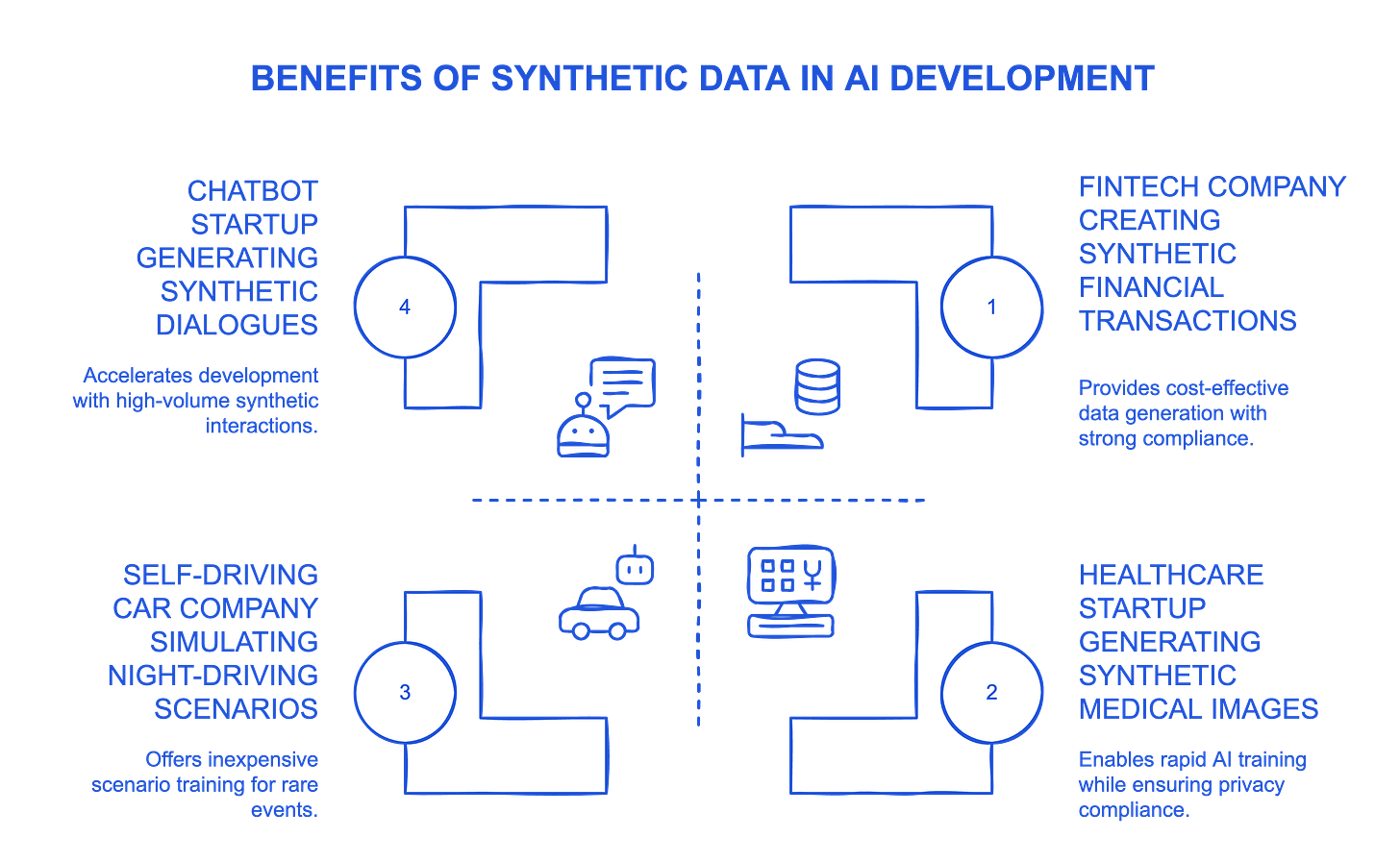

1. No More Data Shortages

Building AI products often requires millions of data points. Collecting, cleaning, and labeling this data is a slow, expensive process. Synthetic data removes these barriers by allowing companies to generate high-quality training data instantly.

Example: A healthcare startup developing an AI-powered diagnostic tool struggles to access patient data due to privacy regulations. Instead, they generate synthetic medical images, allowing them to train their model without legal concerns.

2. Privacy and Compliance Made Easy

With regulations like GDPR, HIPAA, and CCPA tightening around personal data usage, companies are searching for solutions that keep them compliant. Since synthetic data contains no real-world user information, it offers a way to train AI without breaching privacy laws.

Example: A fintech company needs transaction data to train its fraud detection AI, but can’t legally use customer records. By generating synthetic financial transactions, they bypass these restrictions while maintaining accuracy.
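As a rough illustration of the fintech example, the sketch below generates labeled synthetic transactions with a controllable fraud rate. Everything in it is an assumption made for the example: the field names, the `generate_transactions` helper, and especially the rule that fraud involves larger amounts at night. A real team would derive those patterns from domain knowledge or from statistics of real data, not hard-code them.

```python
import random

def generate_transactions(n, fraud_rate=0.05, seed=0):
    """Generate n fake transactions; roughly fraud_rate of them are labeled fraud."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        is_fraud = rng.random() < fraud_rate
        if is_fraud:
            # Illustrative assumption: fraud skews toward large amounts at night.
            amount = rng.lognormvariate(6.0, 1.0)
            hour = rng.choice([0, 1, 2, 3, 4])
        else:
            amount = rng.lognormvariate(3.5, 0.8)
            hour = rng.randrange(24)
        rows.append({
            "id": f"txn-{i:06d}",       # synthetic ID, tied to no real customer
            "amount": round(amount, 2),
            "hour": hour,
            "is_fraud": is_fraud,
        })
    return rows

transactions = generate_transactions(5_000, fraud_rate=0.05)
fraud_share = sum(t["is_fraud"] for t in transactions) / len(transactions)
print(f"{len(transactions)} synthetic transactions, {fraud_share:.1%} labeled fraud")
```

Because the fraud rate is a parameter, the same generator can produce a balanced training set or a realistic, heavily imbalanced test set. A model trained on it is only as good as the assumptions encoded in the generator.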

3. Training AI for the Unexpected

AI models need to be prepared for unpredictable situations, from rare customer behaviors to cybersecurity threats. With synthetic data, companies can create custom scenarios that may not exist in real datasets, making their models more resilient.

Example: A self-driving car company generates synthetic night-driving scenarios to train its AI on conditions that would be difficult (and dangerous) to replicate in real life.

4. Cheaper, Faster AI Development

Traditional AI training relies on collecting vast amounts of real-world data, which requires expensive storage, processing power, and human annotation. Synthetic data dramatically reduces these costs while speeding up the iteration process.

Example: A chatbot startup needs vast conversational data to improve its AI assistant but lacks enough real customer interactions. Instead, they generate synthetic dialogues to enhance the model’s understanding of natural language.

The Future of AI Is Synthetic

The power of synthetic data isn’t just for big tech companies. Today, anyone—whether you're a researcher, startup founder, or developer—can generate high-quality synthetic datasets using accessible tools. Platforms like Hugging Face’s Synthetic Data Generator make it easier than ever to create and fine-tune datasets tailored to specific AI training needs. Learn more here: Hugging Face: Synthetic Data Generator.

While synthetic data is a powerful tool, it’s not a universal substitute for real-world data. Poorly generated synthetic data can introduce biases, lack the complexity needed for high-stakes applications, or fail to capture unpredictable real-world variations. In industries like finance and healthcare, where precision is critical, synthetic data should be used alongside real-world data for validation.
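One simple way to check synthetic data against real data is to compare their distributions. The sketch below computes a two-sample Kolmogorov-Smirnov statistic, the largest gap between two empirical cumulative distributions, using only the standard library. The "real" sample here is itself simulated as a stand-in, and the 50/10/3 parameters are arbitrary choices for the demonstration.

```python
import bisect
import random

def ks_statistic(a, b):
    """Two-sample KS statistic: the largest gap between the empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

rng = random.Random(0)
# Stand-in for a real dataset (simulated here for illustration only).
real = [rng.gauss(50, 10) for _ in range(2000)]
# A faithful generator: same shape as the real data.
good_synthetic = [rng.gauss(50, 10) for _ in range(2000)]
# A poor generator: right average, but far too narrow, so it misses the tails.
poor_synthetic = [rng.gauss(50, 3) for _ in range(2000)]

good_gap = ks_statistic(real, good_synthetic)
poor_gap = ks_statistic(real, poor_synthetic)
print(f"good generator gap: {good_gap:.3f}")
print(f"poor generator gap: {poor_gap:.3f}")
```

The poor generator matches the real average almost perfectly, yet the gap exposes that it has lost the rare, extreme values. A check like this covers one column at a time, so it is a starting point for validation and not a full audit.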

The AI landscape is evolving fast, and synthetic data is at the heart of its next leap forward. Companies that embrace this shift will gain a competitive edge—building more accurate, scalable, and ethically responsible AI products.

While the biggest tech giants are already using synthetic data, the opportunity is just as promising for startups, scale-ups, and businesses looking to leverage AI in their products.

If you’re developing AI-driven solutions, the real question isn’t whether synthetic data will be useful—it’s how soon you can start using it.

Got thoughts, questions, or just want to chat? My DMs are always open—let’s connect!

Synthetic data sounds like an interesting and useful concept, but it's important to remember that the "biases" and "inconsistencies" it tries to remove can be exactly what makes such data "real".

Why are synthetic diamonds cheap and natural ones expensive?

The beauty of natural diamonds comes from the impurities present in the carbon crystal, which won't appear in a synthetic one (too "perfect" because it is created by deposition).

If you are creating a new type of nuclear weapon, the simulations (synthetic) can only take you so far... but at some point you need to produce a real detonation to get the real data that your simulations couldn't predict.

My take is: using synthetic data needs to be intertwined with the use of real data, or we risk creating models that will be dangerously blind to real situations our normalized data cannot predict.

Thanks for sharing your thoughts, Alfredo! You make a compelling point, and the diamond analogy is a powerful one. I do think there's truth in what you're saying: some of the messiness in real-world data holds meaning that synthetic data might smooth over. Those imperfections can carry important context, especially in edge cases.

That said, I’d push back a little. Not all bias or inconsistency in real data is valuable—some of it reflects systemic issues, poor measurement, or unbalanced sampling that skews models in ways we may not want. In those cases, replicating that “reality” isn’t always the right goal.

I agree that synthetic data isn’t a silver bullet, and models built on it alone risk being disconnected from the world they’re meant to operate in. But I’m not sure the answer is just to preserve all the noise in real data, either. It might be that the real challenge is learning to tell the difference between a meaningful imperfection and a harmful one...